In one of our recent blog posts, we showed that containers with deterministic (real-time) behavior can be rolled out using Kubernetes. The next building block is a deterministic, i.e. real-time-capable, network. For this, we use the Time-Sensitive Networking (TSN) standards; for a short explanation of what TSN is, see the box below. The overriding aim of TSN is to exchange time-critical data in parallel with other L2 or IP traffic over a single Ethernet-based network.

Time-Sensitive Networking (TSN) refers to a series of standards that the Time-Sensitive Networking Task Group (IEEE 802.1) is working on [1]. Most of these projects define extensions to the IEEE 802.1Q bridging standard. The extensions primarily address transmission with very low latency and high availability. Possible areas of application are converged networks carrying real-time audio/video streams and, in particular, real-time control streams, such as those used for control in industrial plants, automobiles or aircraft.

The key components of TSN technology can be divided into three basic categories. Each sub-standard from these categories can also be used on its own, but a TSN network only achieves its full performance when the standards are used together and all mechanisms are utilized. The three categories are:

1) Time synchronization: All participating devices require a common understanding of time

2) Scheduling and traffic shaping: All participating devices work according to the same rules when processing and forwarding network packets

3) Selection of communication paths, reservations and fault tolerance: All participating devices work according to the same rules when selecting and reserving bandwidth and communication paths
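
To make the first category more tangible, here is a minimal sketch of how a PTP slave estimates its clock offset and the path delay from the four timestamps t1 to t4 of the IEEE 1588 delay request-response exchange. It assumes the path delay is the same in both directions; the class and function names are illustrative and are not taken from Linux PTP.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Timestamps in ns of one delay request-response exchange.

    t1: master sends Sync          (master clock)
    t2: slave receives Sync        (slave clock)
    t3: slave sends Delay_Req      (slave clock)
    t4: master receives Delay_Req  (master clock)
    """
    t1: int
    t2: int
    t3: int
    t4: int


def offset_and_delay(ex: PtpExchange) -> tuple[float, float]:
    """Return (offset_from_master, mean_path_delay) in ns.

    Assumes a symmetric path: any asymmetry ends up as offset error.
    """
    master_to_slave = ex.t2 - ex.t1  # = path delay + offset
    slave_to_master = ex.t4 - ex.t3  # = path delay - offset
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay


if __name__ == "__main__":
    # Hypothetical values: slave clock 500 ns ahead, link delay 1000 ns.
    print(offset_and_delay(PtpExchange(t1=0, t2=1_500, t3=10_000, t4=10_500)))
```

The slave then corrects its clock by the negative of the estimated offset; ptp4l additionally filters these estimates with a servo, which this sketch omits.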

[1] Source: https://de.wikipedia.org/wiki/Time-Sensitive_Networking, October 11, 2023

The open Ethernet standard is widely used in both IT and automation. It makes it easy to connect devices and to scale networks, and it is based on standardized, cost-effective hardware. Note, however, that Ethernet was not originally designed as a real-time-capable protocol.

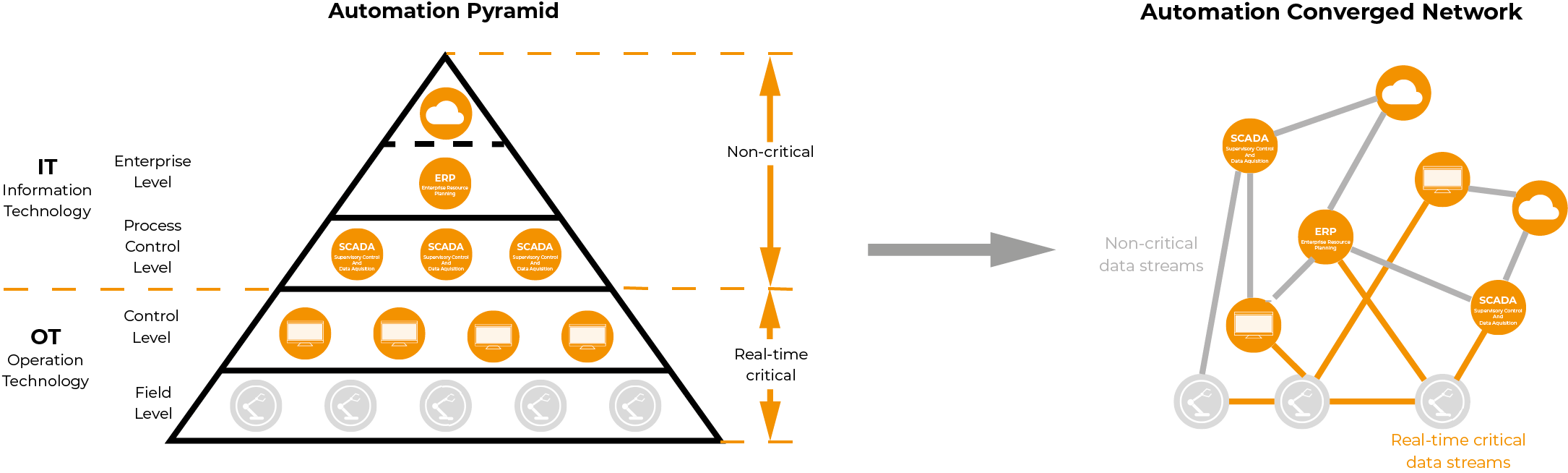

In automation and other areas, such as audio and video, however, guaranteed deterministic data transmission is required. This is why proprietary protocols such as EtherCAT and PROFINET IRT were defined to add these properties to Ethernet. This, in turn, created a separation between the IT (information technology) and OT (operational technology, i.e. the hardware and software used to control industrial devices and systems) worlds and thus introduced expensive duplicate infrastructure.

TSN now offers a path to a standardized infrastructure that allows data to be exchanged from the sensor all the way to IT (the "cloud") while meeting both industrial and IT requirements. In terms of real-time capability, this means cycle times well under 1 millisecond, in some cases far less. This fundamentally transforms the automation pyramid into an open, end-to-end networked system (see Figure 1).

TSN is already supported in Linux. For example, the TAPRIO qdisc implements time slots according to IEEE 802.1Qbv and lets network traffic be assigned to the corresponding traffic classes and time slots based on its socket priority. Linux PTP is an established solution for time synchronization. The Preempt-RT patch is required for the overall system to function correctly, so that determinism can also be guaranteed end to end.
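
The following is a simplified model of the decision an IEEE 802.1Qbv schedule such as the one TAPRIO enforces makes for each frame: the socket priority selects a traffic class via a priority map, and a gate control list, repeated every cycle starting at a base time, determines whether that class's gate is currently open. The map and schedule values are hypothetical, loosely modeled on the example in the tc-taprio man page; guard bands, frame transmission time and the queue mapping are deliberately ignored, and all names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SchedEntry:
    gate_mask: int    # bit n set = gate of traffic class n is open
    interval_ns: int  # how long this entry stays active


# Hypothetical map: socket priority 0..15 -> traffic class (3 classes).
PRIO_TC_MAP = [2, 2, 1, 0, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2]

# Hypothetical gate control list; cycle time = sum of intervals (1 ms).
SCHEDULE = [
    SchedEntry(0b001, 300_000),  # only TC 0 (e.g. time-critical control data)
    SchedEntry(0b010, 300_000),  # only TC 1
    SchedEntry(0b100, 400_000),  # only TC 2 (best effort)
]


def traffic_class(priority: int) -> int:
    """Map a socket priority (as set via SO_PRIORITY) to a traffic class."""
    return PRIO_TC_MAP[priority]


def active_entry(now_ns: int, base_time_ns: int, schedule: list[SchedEntry]) -> SchedEntry:
    """Return the gate control list entry active at now_ns."""
    cycle_ns = sum(e.interval_ns for e in schedule)
    offset = (now_ns - base_time_ns) % cycle_ns  # position inside the current cycle
    for entry in schedule:
        if offset < entry.interval_ns:
            return entry
        offset -= entry.interval_ns
    raise AssertionError("unreachable: offset is always smaller than the cycle")


def gate_open(priority: int, now_ns: int, base_time_ns: int = 0) -> bool:
    """True if a frame with this socket priority may be transmitted at now_ns."""
    tc = traffic_class(priority)
    return bool(active_entry(now_ns, base_time_ns, SCHEDULE).gate_mask & (1 << tc))
```

In a real system the kernel makes this decision inside the qdisc; the application only chooses its socket priority, for example via setsockopt with SO_PRIORITY.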

And what about the containers?

Linutronix has examined this. A suitable container network interface (CNI) plugin is used to connect the real-time applications in Kubernetes containers to the TSN network. There is a whole range of options for this, of which we evaluated the following:

- Host-Device

- MACVLAN

- Bridge

With Host-Device, the container is given complete control over the network card. Neither the host nor other containers can then access the network card or generate network traffic on it. Latencies can therefore be expected to match those of a native setup without containerization. With special network cards (e.g. those supporting SR-IOV, Single Root I/O Virtualization), it is nevertheless possible to connect several containers via one network port.

With MACVLAN, each container receives its own virtual network interface with its own MAC address. In contrast to Host-Device, network traffic passes through a dedicated driver in the host kernel. The host retains full control over the network card itself, and several containers can each be assigned their own virtual network interface.

With the Bridge CNI, a complete virtual switch (L2 Bridge) is instantiated in the Linux kernel. Each container is assigned a so-called veth pair consisting of two virtual network interfaces. One of these interfaces is provided to the real-time container, while the other is connected to the virtual switch. Among other things, this allows more flexibility when setting up the network topology, but has the disadvantage that a network packet has to pass through the Linux network stack several times, with corresponding effects on latencies.
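
To make the three options more concrete, the sketch below builds minimal network configurations for the host-device, macvlan and bridge reference CNI plugins as Python dictionaries. The interface name eth1, the bridge name tsn-br0, the network names, the static IPAM block and the address are assumptions for illustration; in Kubernetes, such a configuration would typically be attached to a pod through a meta-plugin such as Multus, which is likewise assumed here.

```python
from __future__ import annotations

import json


def static_ipam(address: str) -> dict:
    """Static IPAM block assigning one fixed address (CIDR notation)."""
    return {"type": "static", "addresses": [{"address": address}]}


def cni_config(kind: str, address: str, *, parent_if: str = "eth1",
               bridge_name: str = "tsn-br0") -> dict:
    """Build a minimal CNI config for one of the three evaluated plugin types.

    kind: "host-device", "macvlan" or "bridge"
    parent_if: hypothetical TSN-capable NIC on the host
    bridge_name: hypothetical name of the Linux bridge
    """
    cfg = {"cniVersion": "0.4.0", "name": f"tsn-{kind}", "type": kind}
    if kind == "host-device":
        # The whole NIC is moved into the container's network namespace.
        cfg["device"] = parent_if
    elif kind == "macvlan":
        # Virtual interface with its own MAC address on top of the host NIC.
        cfg["master"] = parent_if
        cfg["mode"] = "bridge"
    elif kind == "bridge":
        # veth pair: one end in the container, the other on a Linux bridge.
        cfg["bridge"] = bridge_name
    else:
        raise ValueError(f"unsupported plugin type: {kind}")
    cfg["ipam"] = static_ipam(address)
    return cfg


if __name__ == "__main__":
    for kind in ("host-device", "macvlan", "bridge"):
        print(json.dumps(cni_config(kind, "192.168.100.10/24"), indent=2))
```

Note that the bridge plugin only creates the bridge and the veth pairs; in this assumed setup, attaching the physical TSN port to the bridge is a separate step on the host.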

To investigate the effects, we set up a small Kubernetes cluster with two directly connected nodes whose clocks were synchronized using PTP. We measured the time it took a packet to travel from an application in a container on one node (or running directly on the host in the "Native" case) to the application on the other node, repeating the measurement 1000 times.
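
The evaluation of such a measurement can be sketched as follows, assuming the sender embeds its transmit timestamp in the payload and the receiver reads a receive timestamp from the same PTP-synchronized time base (CLOCK_TAI is assumed here). Because both clocks are synchronized, the one-way latency is simply the difference between the two timestamps. The payload layout and function names are hypothetical, and the values in the usage check are made up, not our measurement data.

```python
from __future__ import annotations

import math
import statistics
import struct
import time

# Hypothetical payload layout: one unsigned 64-bit TX timestamp (ns), network byte order.
TS_FORMAT = "!Q"


def make_payload(tx_ns: int | None = None) -> bytes:
    """Sender side: pack the transmit timestamp into the payload."""
    if tx_ns is None:
        # Linux only; assumes the system clock is disciplined via PTP.
        tx_ns = time.clock_gettime_ns(time.CLOCK_TAI)
    return struct.pack(TS_FORMAT, tx_ns)


def latency_ns(payload: bytes, rx_ns: int) -> int:
    """Receiver side: one-way latency = RX timestamp - embedded TX timestamp."""
    (tx_ns,) = struct.unpack_from(TS_FORMAT, payload)
    return rx_ns - tx_ns


def summarize(latencies_ns: list[int]) -> dict[str, float]:
    """Min, mean, max and 99th percentile (nearest rank), in microseconds."""
    us = sorted(x / 1000 for x in latencies_ns)
    rank = max(1, math.ceil(0.99 * len(us)))
    return {
        "min_us": us[0],
        "mean_us": statistics.fmean(us),
        "max_us": us[-1],
        "p99_us": us[rank - 1],
    }
```

For real-time applications the maximum (worst case) is usually the figure that matters, which is why the summary reports it alongside the mean.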

Two different socket types were examined. With AF_INET, only the user data is passed to the Linux kernel, and the kernel adds the UDP, IP and Ethernet headers. With AF_PACKET, the complete network packet is assembled in the application and only then passed to the kernel. This makes the application more complex, but allows lower latencies to be achieved.
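
As an illustration of the work AF_PACKET shifts into the application, the sketch below assembles a complete Ethernet/IPv4/UDP frame by hand, including the IPv4 header checksum (the one's-complement sum described in RFC 1071). The MAC and IP addresses, ports and interface name are hypothetical; the UDP checksum is left at zero, which IPv4 permits; and no 802.1Q VLAN tag is added, although many TSN setups use one to carry the frame priority. Actually sending the frame requires Linux and root privileges (CAP_NET_RAW).

```python
from __future__ import annotations

import socket
import struct

ETH_P_IP = 0x0800  # EtherType for IPv4


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of 16-bit words (RFC 1071)."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_udp_frame(dst_mac: bytes, src_mac: bytes, src_ip: str, dst_ip: str,
                    src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Assemble the Ethernet, IPv4 and UDP headers that AF_INET would add for us."""
    udp_len = 8 + len(payload)
    udp = struct.pack("!HHHH", src_port, dst_port, udp_len, 0)  # checksum 0 = unused

    ip_no_csum = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,                # version 4, IHL 5 (20 bytes, no options)
        0,                   # DSCP/ECN
        20 + udp_len,        # total length
        0,                   # identification
        0x4000,              # flags: don't fragment
        64,                  # TTL
        socket.IPPROTO_UDP,
        0,                   # checksum placeholder
        socket.inet_aton(src_ip),
        socket.inet_aton(dst_ip),
    )
    csum = struct.pack("!H", ipv4_checksum(ip_no_csum))
    ip = ip_no_csum[:10] + csum + ip_no_csum[12:]

    eth = dst_mac + src_mac + struct.pack("!H", ETH_P_IP)
    return eth + ip + udp + payload


def send_raw(ifname: str, frame: bytes) -> None:
    """Send a prebuilt frame via AF_PACKET (Linux only, needs CAP_NET_RAW)."""
    with socket.socket(socket.AF_PACKET, socket.SOCK_RAW) as s:
        s.bind((ifname, 0))
        s.send(frame)
```

A call such as `send_raw("eth1", frame)` hands the finished frame straight to the network driver, skipping the kernel's UDP/IP processing that AF_INET would perform.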

The results are shown in Figure 2. As expected, the AF_INET latencies are consistently slightly higher than the corresponding AF_PACKET latencies. The Host-Device latencies do not differ significantly from those measured natively without a container, which was to be expected since the container accesses the network card directly. The latencies for MACVLAN are slightly higher, but in practice the difference is probably negligible. With the virtual bridge, especially in the AF_INET case, latency reaches up to 113 µs in extreme cases. For many TSN applications, however, this is still entirely acceptable.

Figure 2: Latencies

This simple setup already demonstrates that time-sensitive data transmission is possible under Linux, both with and without containers. Many further optimizations that were not used in this demonstration setup are possible, such as zero-copy packet processing with AF_XDP. With these, Linux can also be used for particularly time-critical applications, for example in motion control.

Conclusion: Linux with the Preempt-RT patch provides a deterministic, real-time-capable operating system. This property is preserved even when the OS runs as a guest (assuming a hypervisor that supports real time), and also in containers based on this OS.

If you now combine the real-time-capable OS with a real-time-capable network technology such as TSN, you get a standardized system that can exchange data all the way from the sensor to the ERP system (e.g. SAP or Oracle) without breaks between systems. Combined with OPC UA PubSub, data exchange is also possible without any proprietary restrictions.